The Competitive Advantage of Proprietary AI

An interview with AI infrastructure expert Jeff Lundberg.

Written by David Baum | 5 min • April 09, 2025

Artificial intelligence (AI) is transforming the way businesses operate, sparking both excitement and uncertainty. Some organizations are now turning to proprietary AI to gain more control over their AI-driven processes. However, with greater control comes greater responsibility—and a new set of challenges.

To break it all down, technology journalist David Baum sat down with Jeff Lundberg, principal product and solutions manager at Hitachi Vantara, to explore the ins and outs of proprietary AI—how it’s deployed, its risks, and the advantages it can bring to forward-thinking businesses.

David Baum: Set the stage for us: What is proprietary AI, and is it the same as private, on-prem, or self-hosted AI?

Jeff Lundberg: Proprietary enterprise AI generally refers to a closed-source AI model. Unlike open-source models—such as LLaMA, which anybody can download and modify—proprietary AI models are developed and maintained by a technology company that restricts access to the underlying code and architecture. For example, with OpenAI’s ChatGPT or Microsoft’s Copilot, you can interact with the AI but you don’t have full visibility into how it operates.

Proprietary AI isn’t necessarily tied to a specific deployment method; it’s general purpose. It can run in the cloud, on-premises, or in a hybrid setup. Enterprises can even bring models like ChatGPT in-house, using a private instance that keeps interactions secure.

David Baum: What’s driving the shift toward proprietary, enterprise-grade AI? Is the main concern keeping enterprise data out of public or open-source AI models?

Jeff Lundberg: Data security is the top concern. Companies are reluctant to feed sensitive information, like pricing or customer data, into public AI models because of the risk of exposure. A lot of organizations have policies banning employees from entering proprietary data into tools like ChatGPT.

A proprietary approach is particularly useful in industries like finance or healthcare, where exposing data externally could constitute a major risk. For instance, a hospital that uses AI for diagnostic analysis has to comply with HIPAA regulations.

Customization is another driver. A generic AI model might work well for broad tasks, but a proprietary model can be fine-tuned for industry-specific needs. For example, if you want to analyze ten years of internal product roadmaps, you need a model that has been trained on your data, not a generic dataset. Industries such as manufacturing, legal services, and scientific research are all applying AI to their unique data and workflows.

David Baum: How can organizations get started with an AI solution, and what type of expertise is required to develop custom capabilities?

Jeff Lundberg: Building and training an AI model from scratch requires massive resources, expertise, and infrastructure, something only tech giants like Meta or Tesla can easily afford. Training a large foundation model can demand tens or even hundreds of thousands of GPUs, which makes it prohibitively expensive for most companies. The real challenge isn’t just deploying AI; it’s the ongoing cycle of training, retraining, and fine-tuning, all of which require significant computational power. For most businesses, fine-tuning an existing model with proprietary data is far more practical and cost-effective, requiring only a fraction of the resources needed to build one from the ground up.

David Baum: Increasingly, we are seeing AI functions embedded in enterprise software like Oracle and Salesforce. How does that change enterprise AI strategies?

Jeff Lundberg: Embedded AI simplifies adoption. Companies no longer need to build AI from scratch; they can leverage AI capabilities integrated into tools they already use, like Salesforce, SAP, or Microsoft Copilot. However, you need to review your data governance policies to ensure data isn’t being inadvertently shared or exposed. For example, a marketing team might access AI-generated product insights from Salesforce, but they need to make sure the AI doesn’t expose sensitive customer data like names and addresses. Poor governance could lead to compliance violations, such as sending out a mass email that breaches the GDPR.

David Baum: Just because your enterprise data is sequestered behind your firewall doesn’t mean every employee should be able to see all of it. Let’s talk more about data governance. What are the key constraints and issues?

Jeff Lundberg: Good data governance is all about balancing access with security. Just like with business intelligence or other types of data management initiatives, role-based access controls help ensure that employees can only access the information they need. For example, an HR executive might see detailed compensation data, but a junior HR rep should only see aggregate statistics. The same applies to AI models—you need to ensure the AI respects those permissions so sensitive data isn’t exposed to unauthorized users. Regular audits, AI monitoring tools, and data anonymization techniques help prevent unintended leaks.
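To make the HR example concrete, here is a minimal sketch, assuming a hypothetical setup in which an AI assistant may only answer from the level of detail the requesting user’s role allows. The role names, the `ROLE_PERMISSIONS` table, and the `answer_compensation_query` helper are illustrative inventions, not part of any product mentioned in this interview.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical role-to-permission mapping. A real deployment would pull this
# from the organization's identity and access management system.
ROLE_PERMISSIONS = {
    "hr_executive": {"compensation:detail", "compensation:aggregate"},
    "hr_rep": {"compensation:aggregate"},
}


@dataclass
class Employee:
    name: str
    department: str
    salary: int


def answer_compensation_query(role: str, records: list[Employee], department: str):
    """Return only the level of compensation detail the caller's role permits."""
    perms = ROLE_PERMISSIONS.get(role, set())
    in_dept = [r for r in records if r.department == department]
    if "compensation:detail" in perms:
        # Executives may see per-employee figures.
        return {r.name: r.salary for r in in_dept}
    if "compensation:aggregate" in perms:
        # Junior reps see aggregate statistics only, never individual salaries.
        if not in_dept:
            return {"headcount": 0}
        return {
            "headcount": len(in_dept),
            "average_salary": mean(r.salary for r in in_dept),
        }
    # Unknown roles get nothing rather than a default level of access.
    raise PermissionError(f"role {role!r} may not query compensation data")


records = [Employee("Ana", "Sales", 90000), Employee("Ben", "Sales", 70000)]
print(answer_compensation_query("hr_executive", records, "Sales"))
print(answer_compensation_query("hr_rep", records, "Sales"))
```

The design choice worth noting is that the permission check sits in front of the data the model sees, so the assistant never receives individual salaries for a user who is only entitled to aggregates.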

Another key challenge is managing AI outputs to ensure that AI-generated insights don’t inadvertently expose confidential or regulated data.
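One common way to manage outputs is to filter them before they reach the user. The sketch below assumes, purely for illustration, that sensitive values such as email addresses and US Social Security numbers can be caught with regular expressions. The patterns and the `redact_output` function are hypothetical, and production systems typically rely on dedicated PII-detection tooling rather than a handful of regexes.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage
# (names, street addresses, account numbers, and so on).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_output(text: str) -> str:
    """Replace every match of a sensitive pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


print(redact_output("Contact jane.doe@example.com, SSN 123-45-6789."))
# → Contact [EMAIL REDACTED], SSN [SSN REDACTED].
```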

Finally, organizations should have clear policies around training AI models—once an AI model is trained on proprietary data, it’s not easy to erase that knowledge, so proactive governance is essential.

David Baum: Can you share some other examples of how companies integrate proprietary AI into workflows?

Jeff Lundberg: Absolutely. Take a customer support department. If a representative is troubleshooting an issue, a proprietary AI trained on decades of support tickets can instantly suggest solutions based on past cases. In HR, an AI model can help employees navigate the benefits-enrollment process by analyzing their personal data and recommending the best options. An AI model can also help automate data cleaning and preparation tasks for data science professionals so they can focus on more strategic work.

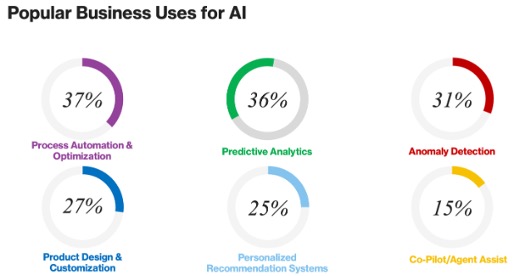

And there are loads of industry examples as well. In manufacturing, AI can process IoT sensor data to detect production defects or predict maintenance needs. In finance, proprietary AI can enhance fraud detection by analyzing patterns in customer transactions, reducing security risks. Retailers use proprietary AI models to predict inventory needs and prevent overstocking or shortages, leading to improved profitability. In e-commerce, AI can personalize customer interactions by analyzing purchasing behavior. According to our research, 44% of business leaders said that their companies are planning to implement data modernization efforts in 2024 to take better advantage of GenAI, for a wide variety of use cases (see figure).

David Baum: What are the top security risks for proprietary AI?

Jeff Lundberg: When it comes to cybersecurity, AI is a double-edged sword. On one hand, companies use AI to detect cyber threats and automate responses. On the other hand, cybercriminals are also leveraging AI to develop more sophisticated attacks. That brings us back to data governance: you need rigorous governance policies to prevent proprietary AI from storing or exposing confidential information.

Recent AI-enabled cyberattacks, such as voice cloning used in fraud cases, highlight the growing need for stringent security measures. AI models trained on proprietary data must be monitored for adversarial attacks, in which bad actors attempt to manipulate AI behavior, for example by injecting malicious data. AI-driven phishing and deepfake scams are becoming more sophisticated, making cyber-defense strategies even more critical.

Looking ahead, quantum computing could introduce new security threats, since it has the potential to crack widely used public-key encryption standards, which could make today’s security protocols obsolete.

David Baum: Final thoughts—what should enterprises keep in mind when adopting proprietary AI?

Jeff Lundberg: Proprietary AI offers more control, security, and customization—but it requires investment. Many businesses rely on service providers to offer a safe set of AI tools along with clear guidelines on their limitations. They can help you understand what data should and shouldn’t be fed into AI models, ensuring you don’t unintentionally expose sensitive information or create long-term risks.

If you are just starting out, leveraging proprietary AI within existing enterprise apps is the easiest path. If you want to do some custom development or roll out a more advanced AI strategy, having the right talent and resources is key. You also have to assess whether you have the infrastructure and expertise to run and tune the models. Not all companies have data scientists and AI developers on staff to undertake custom development projects.

David Baum: What’s the take-home point for today’s enterprises?

Jeff Lundberg: AI is a brave new world that many organizations are still trying to navigate. The value lies in how well it’s integrated into your workflows and business objectives. With a proprietary AI system, the heavy lifting has been done for you. You don’t have to train a model or hire a bunch of data scientists. You need a small group of people who can maintain the infrastructure, and even that capability can be acquired through a partnership.

Ultimately, enterprises that thoughtfully implement proprietary AI will gain a competitive edge.