Green IT is a Business Strategy

How AI leaders can tackle the sustainability paradox.

Written by Jimmy Oribiana | 5 min • October 09, 2025

Life gives us lessons about our complex environment. A paradox, for instance, teaches us that life is often more complex and nuanced than it appears on the surface. It challenges our conventional thinking and encourages us to look beyond the obvious.

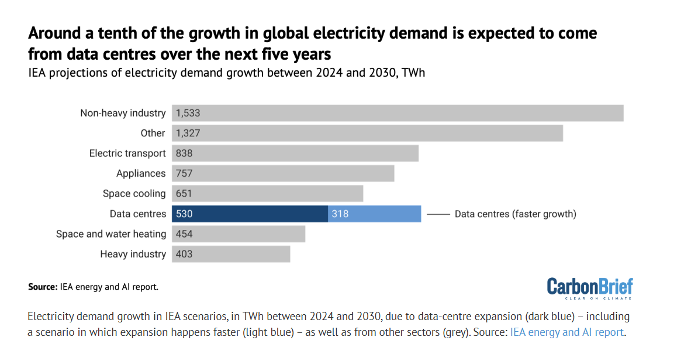

One paradox for AI leaders is hiding in plain sight: the systems we build to forecast demand, balance grids, and route supply chains are leaning ever harder on the same power and water we’re trying to conserve. This is the sustainability paradox: the technology we are building to help conserve resources is itself a rapidly growing consumer of electricity.

This year, the largest hyperscalers are in the middle of an unprecedented buildout: Amazon is on pace to invest roughly $100 billion toward AI infrastructure; Microsoft will spend about $80 billion; and Alphabet will allocate about $85 billion to expand servers and data centers for AI workloads. Industry trackers now estimate total hyperscaler capital spending in 2025 in the $392 billion range, with worldwide data center capex up more than 50% year-over-year in Q1 alone as next‑gen GPU clusters come online.

Aside from the dollar cost to the business, there are also geopolitical reasons to secure energy. Nations leading in AI will need secure, abundant energy. This could intensify competition for renewables, nuclear, and rare earth resources. If energy scarcity drives AI concentration in a few countries, it could deepen global inequality and digital divides.

So where does that leave leaders who need AI to deliver outcomes without compounding the problem? The answer isn’t far-off or a visionary gamble; it’s about making deliberate, practical choices. It’s operational discipline.

There are a few actions any AI leader can take now.

First, time non‑urgent workloads for cleaner power so emissions fall without sacrificing results. Second, set lifecycle emissions budgets for models the way you set spend caps, making trade‑offs explicit up front. Third, make GreenOps a standing team sport—shared metrics, shared incentives, visible dashboards. Fourth, choose regions and architectures that ease water stress, not just latency. Fifth, make impact visible by default with sustainability tagging in pipelines and products, so carbon and water show up next to cost and performance.

Integrating these actions into a larger sustainability strategy won’t make the paradox vanish, but it makes the paradox manageable and progress measurable. If we treat carbon, water, and energy as first‑class metrics alongside latency and accuracy, we’ll ship systems that earn trust while sharpening performance and resilience.

We need to look closer at how AI workloads are executed. From training complex neural networks to deploying real-time inference systems, these workloads demand significant computational resources. Every model iteration, every batch of data processed, and every inference call consumes energy, often at a scale that’s invisible to end users but deeply impactful in aggregate. This raises an essential question: how and when should we run these workloads? The answer can make a substantial difference for sustainability.

Leaders and practitioners should consider running AI workloads when the grid is greenest. Most AI workloads are scheduled based on availability of compute resources or business urgency. But what if we scheduled them based on carbon intensity? For ROI-focused leaders, carbon-aware scheduling is a cost-and-risk lever, not a trade-off. Proven implementations show that shifting flexible workloads to cleaner, often off-peak windows lowers marginal energy costs and demand charges while cutting emissions that may soon carry explicit costs.

For example, Google’s carbon-intelligent platform already shifts non-urgent jobs without violating service level objectives (SLOs), even supporting demand response during grid stress. Microsoft’s Azure pilots demonstrate measurable Software Carbon Intensity reductions by time-shifting batch jobs using carbon-intensity signals.
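The time-shifting idea can be sketched in a few lines. This is a minimal illustration, not Google’s or Microsoft’s implementation: it assumes an hourly carbon-intensity forecast is already available (the values below are invented), treats the job’s deadline as its SLO, and picks the start hour whose window has the lowest average intensity. It then compares a simplified Software Carbon Intensity score, SCI = ((E × I) + M) per R, where E is energy, I is grid carbon intensity, M is embodied emissions, and R is the functional unit, for running now versus running later. The helpers `window_mean`, `best_start_hour`, and `sci` are hypothetical names.

```python
from typing import Sequence


def window_mean(forecast: Sequence[float], start: int, duration_h: int) -> float:
    """Average grid carbon intensity (gCO2e/kWh) over a run of duration_h hours."""
    return sum(forecast[start:start + duration_h]) / duration_h


def best_start_hour(forecast: Sequence[float], duration_h: int, deadline_h: int) -> int:
    """Pick the start hour with the cleanest average intensity.

    forecast[i] is an assumed hourly intensity forecast, index 0 = now.
    deadline_h is the hour by which the job must finish; it stands in for
    the job's SLO, so no candidate window may run past it.
    """
    latest_start = min(deadline_h, len(forecast)) - duration_h
    if latest_start < 0:
        raise ValueError("job cannot finish before its deadline")
    # min() returns the earliest start among equally clean windows.
    return min(range(latest_start + 1),
               key=lambda s: window_mean(forecast, s, duration_h))


def sci(energy_kwh: float, intensity: float, embodied_g: float, units: float) -> float:
    """Simplified Software Carbon Intensity: ((E * I) + M) per R, in gCO2e per unit."""
    return (energy_kwh * intensity + embodied_g) / units


# Illustrative numbers only: a 3-hour, 120 kWh batch job over 10,000 records
# with 5,000 gCO2e of amortized embodied emissions, due within 9 hours.
forecast = [450, 430, 400, 320, 210, 180, 190, 260, 380, 420]
start = best_start_hour(forecast, duration_h=3, deadline_h=9)
sci_now = sci(120, window_mean(forecast, 0, 3), 5_000, 10_000)
sci_shifted = sci(120, window_mean(forecast, start, 3), 5_000, 10_000)
print(start, round(sci_now, 2), round(sci_shifted, 2))  # 4 5.62 2.82
```

With these made-up numbers, waiting four hours roughly halves the per-record score. In practice the forecast would come from a carbon-intensity data provider, and its accuracy bounds how much of that saving is real.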

CASPER is a scheduling system that redirects traffic from high-carbon regions to low-carbon regions and determines the right number of servers to provision. It has achieved emissions reductions of up to about 70% while meeting latency targets, validating its feasibility for production-grade services.
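The two decisions described above, where to send traffic and how many servers to run, can be illustrated with a deliberately simple greedy sketch. This is not CASPER’s algorithm; it assumes hypothetical regions, carbon intensities, capacities, latencies, and per-server throughput, and sends each source’s traffic to the cleanest region that stays within a latency SLO before sizing servers from the routed load.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    carbon_g_per_kwh: float  # hypothetical average grid intensity
    capacity_rps: float      # hypothetical max requests/s the region can absorb


def route(demand_rps: dict[str, float], regions: list[Region],
          latency_ms: dict[tuple[str, str], float], slo_ms: float) -> dict[str, float]:
    """Greedily place each source's traffic in the cleanest region within the latency SLO."""
    load = {r.name: 0.0 for r in regions}
    cleanest_first = sorted(regions, key=lambda r: r.carbon_g_per_kwh)
    for src, rps in demand_rps.items():
        remaining = rps
        for r in cleanest_first:
            if remaining <= 0:
                break
            if latency_ms[(src, r.name)] > slo_ms:
                continue  # this region would miss the latency target
            take = min(remaining, r.capacity_rps - load[r.name])
            if take > 0:
                load[r.name] += take
                remaining -= take
        if remaining > 0:
            raise RuntimeError(f"not enough in-SLO capacity for traffic from {src}")
    return load


def servers_needed(load_rps: dict[str, float], per_server_rps: float) -> dict[str, int]:
    """Provision only as many servers as the routed load requires."""
    return {name: math.ceil(rps / per_server_rps) for name, rps in load_rps.items()}


regions = [Region("north", 50, 800), Region("central", 300, 2_000), Region("south", 500, 2_000)]
latency = {("east", "north"): 40, ("east", "central"): 30, ("east", "south"): 60,
           ("west", "north"): 120, ("west", "central"): 50, ("west", "south"): 35}
load = route({"east": 600, "west": 500}, regions, latency, slo_ms=80)
print(load)                       # {'north': 600.0, 'central': 500.0, 'south': 0.0}
print(servers_needed(load, 250))  # {'north': 3, 'central': 2, 'south': 0}
```

Because this greedy pass serves sources one at a time, its result depends on their order; a production system would more likely solve placement for all sources jointly.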

Encourage finance to treat carbon like currency: approve emissions the way you approve spend, and manage the trade-offs. One strategy for reducing the carbon emissions of machine learning (ML) is to set emissions limits for each stage of a project: training, tuning, and serving models. In software development, pull requests (PRs) are used to propose changes to a codebase, and PR templates can include estimates for metrics such as cost per run, energy consumption, carbon emissions, and water usage.
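One way to make a budget explicit is to check each PR’s estimates against per-stage caps before merge. This is a minimal sketch, assuming the estimates are filled in from a PR template or a tracking tool; the stage limits, the `StageBudget` type, and the `check_budget` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageBudget:
    max_kg_co2e: float       # emissions cap for the stage
    max_water_liters: float  # water cap for the stage


# Hypothetical lifecycle budgets, approved the way a spend cap would be.
BUDGETS = {
    "training": StageBudget(max_kg_co2e=500.0, max_water_liters=2_000.0),
    "tuning": StageBudget(max_kg_co2e=100.0, max_water_liters=400.0),
    "serving": StageBudget(max_kg_co2e=50.0, max_water_liters=200.0),  # per month
}


def check_budget(stage: str, spent_kg: float, spent_l: float,
                 est_kg: float, est_l: float) -> list:
    """Return budget violations if this change's estimate is added to spend so far."""
    budget = BUDGETS[stage]
    problems = []
    if spent_kg + est_kg > budget.max_kg_co2e:
        problems.append(f"{stage}: CO2e {spent_kg + est_kg:.1f} kg > {budget.max_kg_co2e:.1f} kg")
    if spent_l + est_l > budget.max_water_liters:
        problems.append(f"{stage}: water {spent_l + est_l:.0f} L > {budget.max_water_liters:.0f} L")
    return problems


# A PR estimating 95 kg and 300 L for training, with 420 kg and 1,500 L already spent.
print(check_budget("training", 420, 1_500, 95, 300))
# ['training: CO2e 515.0 kg > 500.0 kg']
```

A CI job could fail or flag the PR when the list is non-empty, which turns the trade-off into an explicit approval rather than a surprise after deployment.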

Tools like CodeCarbon estimate the carbon dioxide emissions produced by machine learning experiments by tracking hardware power usage and mapping it to the carbon intensity of the local electricity grid. Adding one to a training script takes only a few lines of code.
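CodeCarbon’s own entry point is its `EmissionsTracker` class, which is started before and stopped after the code being measured. To show the underlying arithmetic without depending on that package, here is a hypothetical stand-in (`SimpleEmissionsTracker` is not part of CodeCarbon) that multiplies an assumed average power draw by wall-clock time and a fixed grid intensity. Real trackers measure or model hardware power and look up regional intensity, so treat these numbers as rough.

```python
import time


def estimate_kg_co2e(avg_power_w: float, seconds: float, grid_g_per_kwh: float) -> float:
    """Energy (kWh) x grid intensity (gCO2e/kWh), converted to kg CO2e."""
    energy_kwh = avg_power_w * seconds / 3_600_000  # watt-seconds -> kWh
    return energy_kwh * grid_g_per_kwh / 1000


class SimpleEmissionsTracker:
    """Hypothetical context manager: times a block and estimates its emissions.

    avg_power_w and grid_g_per_kwh are assumptions supplied by the caller,
    not measurements.
    """

    def __init__(self, avg_power_w: float, grid_g_per_kwh: float):
        self.avg_power_w = avg_power_w
        self.grid_g_per_kwh = grid_g_per_kwh
        self.kg_co2e = 0.0

    def __enter__(self):
        self._start = time.perf_counter()
        return self

    def __exit__(self, *exc):
        elapsed = time.perf_counter() - self._start
        self.kg_co2e = estimate_kg_co2e(self.avg_power_w, elapsed, self.grid_g_per_kwh)
        return False  # never swallow exceptions from the tracked block


with SimpleEmissionsTracker(avg_power_w=300, grid_g_per_kwh=400) as t:
    total = sum(i * i for i in range(100_000))  # stand-in for a training step
print(f"{t.kg_co2e:.9f} kg CO2e")
```

As a sanity check, one hour at 1,000 W on a 400 gCO2e/kWh grid is 1 kWh, or 0.4 kg CO2e.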

GreenOps teams are cross-functional groups that take ownership of executing sustainability goals while driving operational efficiencies, cost savings, and stronger corporate social responsibility. Some areas where GreenOps teams can contribute:

There are already strong examples of GreenOps in action. UPS, for instance, teamed with Google Cloud to develop route optimization software, resulting in annual savings of $400 million and a 10-million-gallon reduction in fuel consumption. Microsoft’s GreenOps teams optimize energy and water use in their global data centers, using AI-driven workload scheduling, closed-loop cooling systems, and real-time carbon tracking to help the company pursue its goal of becoming carbon negative by 2030.

Despite growing interest, GreenOps remains uncommon in practice, especially across traditional, asset-heavy industries where sustainability responsibilities are fragmented. But when sustainability is everyone’s job, it becomes no one’s burden.

Choose cloud regions with lower water stress. This industry talks a lot about carbon, but water is the real hidden cost of AI.

AI data centers use millions of gallons of water for cooling. Water-aware deployment means choosing regions with lower water stress for inference-heavy workloads.
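Water-aware placement can be expressed as a simple selection rule. This is a minimal sketch, assuming you already have a per-region water-stress score (for example on a 0 to 5 scale like the one in WRI’s Aqueduct) alongside latency and carbon figures; all region names and numbers are made up. It filters by the latency target first, then prefers the lowest water stress and breaks ties on carbon intensity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RegionProfile:
    name: str
    p95_latency_ms: float     # measured from the main user base (hypothetical)
    water_stress: float       # assumed 0 (low) to 5 (extremely high)
    carbon_g_per_kwh: float   # assumed average grid intensity


def pick_inference_region(regions: list[RegionProfile], latency_slo_ms: float) -> RegionProfile:
    """Among regions that meet the latency SLO, prefer low water stress, then low carbon."""
    eligible = [r for r in regions if r.p95_latency_ms <= latency_slo_ms]
    if not eligible:
        raise ValueError("no region meets the latency SLO")
    return min(eligible, key=lambda r: (r.water_stress, r.carbon_g_per_kwh))


candidates = [
    RegionProfile("desert-west", 38, water_stress=4.6, carbon_g_per_kwh=250),
    RegionProfile("lakes-north", 55, water_stress=1.2, carbon_g_per_kwh=310),
    RegionProfile("coast-east", 95, water_stress=0.8, carbon_g_per_kwh=120),
]
print(pick_inference_region(candidates, latency_slo_ms=60).name)  # lakes-north
```

Sorting on a tuple makes the priority order explicit; a weighted score is an alternative when a team wants to trade water stress against carbon directly.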

In 2024, hyperscale water use hit headline scale—Google and Microsoft data centers alone drew nearly 6 billion gallons, roughly the annual irrigation of about 40 golf courses—while a single 100MW facility using evaporative cooling can consume 500–700 million gallons a year, comparable to the needs of tens of thousands of residents.

Cluster a few such sites in one metro area, and withdrawals can push into the billions of gallons, competing with municipal and industrial users in water‑stressed regions.
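As a rough sanity check on the 100MW figure, the implied water use per unit of energy can be worked out. This assumes the facility draws its full 100MW around the clock (lower real utilization would push the per-kWh figure up) and uses the standard 3.785411784 liters per US gallon; the result is in liters per kWh, the same units as the water usage effectiveness (WUE) metric.

```python
LITERS_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8_760


def liters_per_kwh(gallons_per_year: float, power_mw: float) -> float:
    """Annual water use divided by annual energy, assuming constant full load."""
    energy_kwh = power_mw * 1_000 * HOURS_PER_YEAR
    return gallons_per_year * LITERS_PER_US_GALLON / energy_kwh


for gallons in (500e6, 700e6):
    print(f"{gallons / 1e6:.0f}M gal/yr -> {liters_per_kwh(gallons, 100):.2f} L/kWh")
# 500M gal/yr -> 2.16 L/kWh
# 700M gal/yr -> 3.02 L/kWh
```

Under that full-load assumption, the quoted 500 to 700 million gallons works out to roughly 2.2 to 3.0 liters of water for every kWh the facility uses.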

The fix for this is operational, not theoretical:

Make environmental impact visible in your codebase. What gets measured gets managed—and what gets tagged gets noticed.

Sustainability tagging involves adding metadata to models and pipelines that track energy, carbon, and water usage. Teams can create tagging systems in GitHub or GitLab, include sustainability notes in model cards, and use badges or dashboards to visualize impact across projects.

Open-source projects like the Sustainable AI Energy Consumption Prediction Model are leading the way. Sharing impact data this openly builds awareness, encourages accountability, and helps teams learn from each other.
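A sustainability tag can be as small as a few fields that travel with a model. This is a minimal sketch, assuming the figures come from whatever tracking a team already runs; the `SustainabilityTag` fields and the rendered section are a hypothetical format, not a GitHub, GitLab, or model-card standard. The point is that one record can feed a model card, a badge, or a dashboard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SustainabilityTag:
    """Hypothetical metadata attached to a model or pipeline run."""
    model: str
    stage: str            # e.g. "training" or "serving"
    energy_kwh: float
    kg_co2e: float
    water_liters: float
    region: str


def to_model_card_section(tag: SustainabilityTag) -> str:
    """Render the tag as a Markdown section for a model card."""
    return "\n".join([
        "## Environmental impact",
        f"- Stage: {tag.stage}",
        f"- Region: {tag.region}",
        f"- Energy: {tag.energy_kwh:,.1f} kWh",
        f"- Emissions: {tag.kg_co2e:,.1f} kg CO2e",
        f"- Water: {tag.water_liters:,.0f} L",
    ])


# Illustrative values only.
tag = SustainabilityTag("demand-forecaster-v3", "training", 1250.0, 410.5, 2700.0, "lakes-north")
section = to_model_card_section(tag)
print(section)
print(json.dumps(asdict(tag)))  # same data, machine-readable for dashboards
```

Keeping the human-readable section and the JSON record generated from the same object avoids the two drifting apart.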

The AI sustainability paradox isn’t going away, but we can choose how we respond to it. These five ideas (carbon-aware scheduling, emissions budgeting, GreenOps, water-aware deployment, and sustainability tagging) are practical steps AI leaders can put in place today. If we collectively make it our problem, we can solve it together.

If your AI strategy doesn’t include sustainability, is it really intelligent?